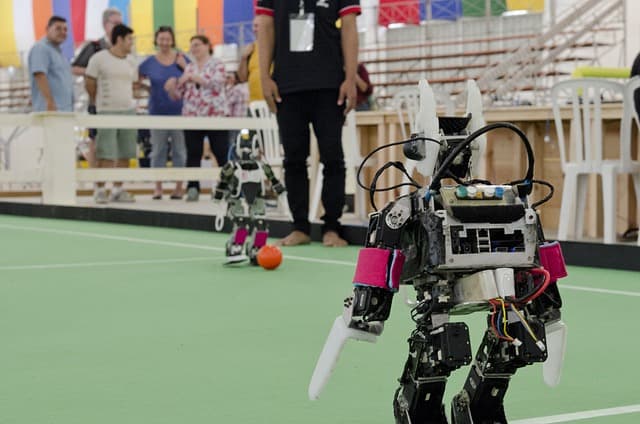

Children’s School of Robotics

Individual and group classes in programming and robotics for children aged 5 to 17. Art&Ca Robotics School is a unique opportunity

About us

Art&Ca Online School is about teaching kids robotics, making new friends, and gaining a perspective on the world of innovation.

Courses

Members of our schools

Robotics courses

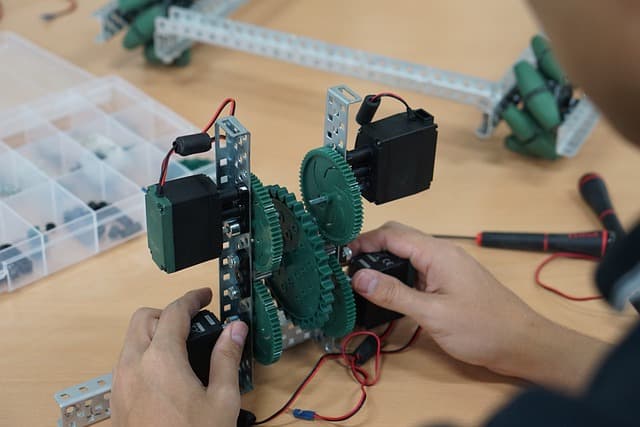

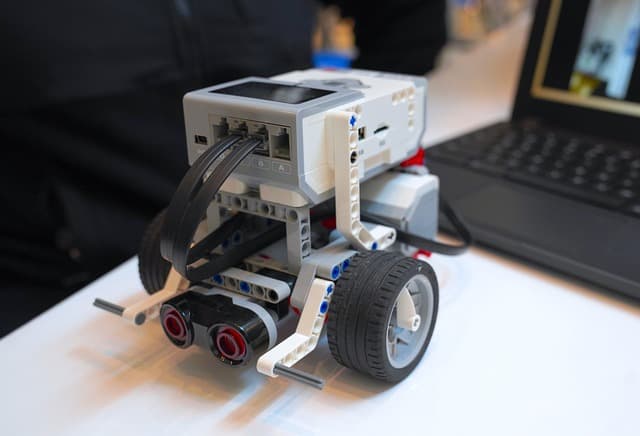

Modern methods

We use the latest technologies in teaching: children work with Lego and Arduino and create unique projects.

Individual approach

We adjust the lesson program to each child's progress and create an individual training plan!

Progress Monitoring

We give your child monthly feedback on their progress, so you’ll always know how useful our lessons are.

Convenient platform

All lessons are stored in a personal account. We motivate your child to learn and give you gifts for their achievements!

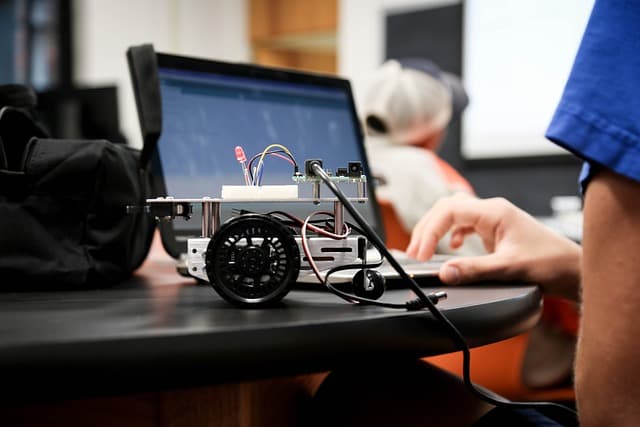

Practical Lessons

Every lesson is a small project of its own. We create rich, interactive lessons with lots of practice.

Lesson Schedule

One lesson lasts 60-90 minutes. Classes are held once a week.